We would like to invite you to participate in a project to play and record some Holiday music. The idea is that we would make recordings using the equipment that you already have and I would edit them into a video that would be streamed online sometime in December.

There are two ways to participate:

- attend our online rehearsals on Jamulus (if you are not participating already)

or

- record your part at home using your phone, while listening to the accompaniment track on headphones

For those participating in the online rehearsal, we would record you during the rehearsal. Those who'd like to record by themselves would do that on their own.

We would really love to have as many of you join as possible! I understand that online rehearsals might be difficult to attend, but recording your part by yourself might be easier.

For recording at home, the only equipment needed is your phone, to record yourself, and another device (another phone, computer, or tablet) with headphones, to play back the accompaniment.

Here is a tentative list of pieces:

- Christmas Eve in Sarajevo

- God Rest Ye Merry, Gentlemen

- What Child Is This

- Joy To The World

- O Holy Night (optional)

- We Wish You a Merry Christmas

If you are already participating in online rehearsals, Don will contact you (or has already contacted you) to deliver sheet music. If you'd like to join, either for the online rehearsals or just to record yourself, please send an email to Don (please do not simply reply to this email, otherwise we might lose track of who's in and who's not).

I would love for everyone interested to participate, if possible, and share some Holiday spirit in these challenging times.

Take care,

Marcin

your most virtual stick-waver

Rehearsing remotely over the internet with a low latency system

Marcin Pączkowski | http://marcinpaczkowski.com

Postdoctoral Scholar, Department of Digital Arts and Experimental Media, University of Washington

Conductor, Evergreen Community Orchestra

This is a draft of a paper. In its current form the references are incomplete, the data needs more research, and more proofreading is necessary. However, I am making it public in the hope that it helps other musicians collaborate during the pandemic. I am open to any comments you might have. The video documenting a recent remote rehearsal can be found here.

Introduction

This paper documents the experiments to date on rehearsing remotely, over the internet, with a medium-size instrumental ensemble. This scenario arose from the limits on person-to-person contact imposed as a precaution against the spread of COVID-19. The practical approach is described, along with the current results and future goals.

The desire to play together has been expressed in numerous situations since the pandemic started. The author has set up specialized systems for musical collaboration at the Department of Digital Arts and Experimental Media (DXARTS) at the University of Washington, where experimental music groups have been rehearsing regularly over the last few months. When the author mentioned such a system to the members of the Evergreen Community Orchestra, they became very interested and enthusiastic about the possibility of working on music together again, as it had become clear that the limits on in-person gatherings would last for many months.

A number of low-latency systems aimed at musical collaboration over the internet have been developed so far: Audio over OSC (AoO), JackTrip, JamKazam, Jamulus, Ninjam, SoundJack, and others. At the DXARTS department an elaborate system has been set up using JackTrip, enabling flexible mixing and routing, uncompressed multichannel audio transmission, and seamless recording. Musicians use dedicated sound cards and external microphones. Setting up this system with everyone required quite a bit of trial and error. Moreover, during a rehearsal the acoustic mix for every musician has to be controlled centrally. This made the system impractical for a larger group, where the author needed to focus on leading the rehearsal itself. After testing a number of the platforms mentioned above, Jamulus seemed to provide the best balance between low-latency performance, ease of setup, ease of use, and functionality. In particular, the ability of every player to control their own mix of instruments is crucial in this case.

Latency in acoustic and amplified music

Latency, for the purpose of this paper used interchangeably with the word "delay", is the time that passes between the sound leaving its source (e.g. an instrument) and arriving at its destination (e.g. human ears). Existing research describes various aspects of latency and its perception. My point here is to make these considerations more relatable for musicians, by highlighting the relation between latencies experienced in various situations. Please be advised that the following calculations aim to relate to experiences that may be familiar to many readers, at some cost in accuracy. The word "about" should be placed in front of all the following numbers and will thus be omitted.

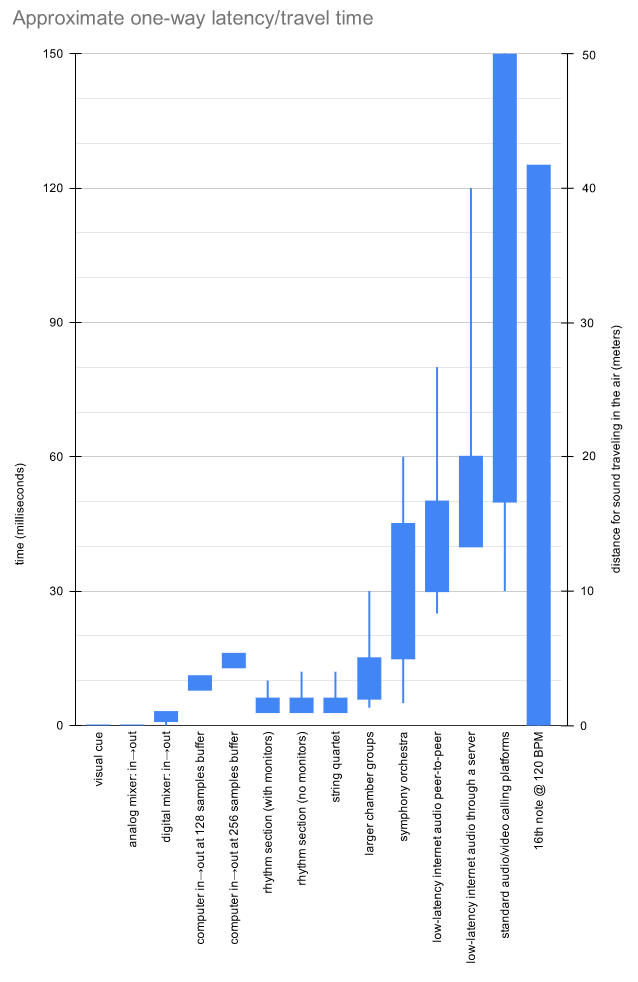

In the air, sound waves take 3 milliseconds (3 ms, three thousandths of a second) to travel 1 meter (1 m), or 3 feet. What does that mean in practice? Adjacent players in a typical string quartet setting hear each other with a 3-5 ms delay (spaced 1-1.5 m apart), but the outermost players hear each other with a 6-9 ms delay (spaced 2-3 m apart). In other chamber music configurations these distances, and thus the delays, might be slightly larger, perhaps up to 12-15 ms (4-5 m apart), depending on the type of group. With larger ensembles, these delays naturally increase. Let us consider a symphony orchestra and a hypothetical duo of the 4th French horn playing together with the tuba. In a typical arrangement these instruments would be positioned on opposite sides of the stage, at least 15 m apart, which results in 45 ms of delay. Similar (or longer) delays occur between the first stands of the string section and the brass section. Of course, large ensembles typically play with a conductor, who provides an additional visual cue that aids synchronization. Light travels 1,000,000 times faster than sound, so the delay of the visual cue itself is negligible. Moreover, the hypothetical duo would typically play in relation to other instruments, which would help the perception of tempo and synchronicity. Nonetheless, the delay between the two instruments alone would be considerable.
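The 3 ms-per-meter rule of thumb comes straight from the speed of sound. As a minimal sketch, assuming a speed of sound of 343 m/s (dry air at about 20 °C), the following Python reproduces the distances used above. It yields slightly smaller delays (2.9 ms per meter, about 44 ms for 15 m) because it does not round up.

```python
# Assumed speed of sound in dry air at about 20 °C, in meters per second.
SPEED_OF_SOUND_M_PER_S = 343.0


def acoustic_delay_ms(distance_m: float) -> float:
    """Time, in milliseconds, for sound to travel distance_m meters through air."""
    return distance_m / SPEED_OF_SOUND_M_PER_S * 1000.0


# Distances taken from the examples in the text.
examples = [
    ("adjacent quartet players", 1.0),
    ("outermost quartet players", 3.0),
    ("large chamber group", 5.0),
    ("4th horn to tuba", 15.0),
]
for label, meters in examples:
    print(f"{label:26s} {meters:5.1f} m -> {acoustic_delay_ms(meters):5.1f} ms")
```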

Timing is particularly crucial in styles of music that typically employ a rhythm section (drums, bass, guitar and/or piano). On a small club's stage, the drummer and the bassist are typically no farther than 1-2 m from each other (3-6 ms delay, assuming no stage monitors), and the same goes for the piano or guitar. It is interesting, however, to consider the three instruments (drums, bass, piano) arranged in a line, with the bass player in the middle. If the musicians are 2 m apart, the bassist hears both the piano and the drums with a 6 ms delay, but the pianist hears the drums (and vice versa) with a 12 ms delay. Solo instruments might be positioned even farther away, increasing the delay between them and the rhythm section. It is worth noting, however, that music featuring a rhythm section often uses amplification, and thus stage monitoring. With stage monitoring the latencies are likely to be "normalized", because musicians are typically positioned at a similar distance from their respective monitors, and the latency of audio transmission, if present, is the same for all the musicians. In that case the delay should be in the 3-10 ms range, after adding up the distance between the instrument and the microphone (typically below 1 m), the distance between the stage monitor and the musician (1-2 m), and, in the case of digital mixing consoles, the in-to-out latency of the audio system (usually below 3 ms).
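The "normalizing" effect of monitors can be shown by comparing the two cases side by side. The sketch below assumes the same 343 m/s speed of sound, places the trio on a line 2 m apart, and, for the monitored case, uses illustrative values (0.5 m from instrument to microphone, 1.5 m from wedge to ears, a 2 ms digital console) picked from within the ranges given above. It ignores the direct acoustic sound that players also hear on stage.

```python
from itertools import combinations

SPEED_OF_SOUND_M_PER_S = 343.0  # assumed: dry air at about 20 °C


def delay_ms(distance_m: float) -> float:
    """Acoustic travel time over distance_m meters, in milliseconds."""
    return distance_m / SPEED_OF_SOUND_M_PER_S * 1000.0


def monitored_delay_ms(mic_m: float, monitor_m: float, console_ms: float) -> float:
    """Instrument -> microphone -> console -> stage monitor -> listener's ears."""
    return delay_ms(mic_m) + console_ms + delay_ms(monitor_m)


# Trio on a line, 2 m apart, bass in the middle (positions in meters).
positions = {"piano": 0.0, "bass": 2.0, "drums": 4.0}
for a, b in combinations(positions, 2):
    distance = abs(positions[a] - positions[b])
    print(f"{a}-{b}: acoustic {delay_ms(distance):.1f} ms")

# With monitors, every pair hears each other through the same chain,
# so the delay no longer depends on where the players stand.
print(f"any pair via monitors: {monitored_delay_ms(0.5, 1.5, 2.0):.1f} ms")
```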

How does "X" ms of latency sound? To put these numbers in perspective, let us consider musical tempo. With the quarter note at 120 beats per minute (BPM), or 2 beats per second, a single 16th note lasts 125 ms. The larger delays mentioned before (e.g. between distant members of the orchestra) are on the order of a third of the length of that 16th note.
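The tempo comparison is simple arithmetic: at B beats per minute a quarter note lasts 60000 / B ms, and a 16th note lasts a quarter of that. A small Python helper (the function name is illustrative) expresses any delay as a fraction of a note value.

```python
def note_ms(bpm: float, note_value: int) -> float:
    """Duration of one note at a tempo given in quarter notes per minute.

    note_value: 4 for a quarter note, 8 for an eighth note, 16 for a sixteenth, ...
    """
    quarter_ms = 60_000.0 / bpm
    return quarter_ms * 4 / note_value


sixteenth = note_ms(120, 16)  # 125.0 ms
print(f"16th note at 120 BPM: {sixteenth:.0f} ms")

# Delays from the examples above: outermost quartet players and horn-to-tuba.
for delay_ms in (9, 45):
    print(f"{delay_ms} ms is {delay_ms / sixteenth:.0%} of a 16th note")
```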

It is worth noting that musicians use a multitude of cues for synchronization while performing. Depending on the style of music, the size of the ensemble, the acoustic circumstances, and the presence or lack of amplification/monitoring, visual cues complement the acoustic cues to a varying degree. Additionally, room reverberation "smears" the perceived latency, as multiple copies of the same sound, each delayed by a slightly different amount, arrive at the musicians' ears.

Latency in computer audio and over the network

The following simplified description of audio processing aims to help in understanding the factors that contribute to latency in computer audio. Computers process continuous signals in "blocks" of data, which are stored in and moved between "buffers" (memory locations) during processing. When recording or processing audio, the sound from an input, e.g. a microphone, reaches the sound card, where it is "sampled" by the analog-to-digital converter (ADC) at a constant rate, called the sampling rate (typically 44100 or 48000 Hz, i.e. times per second). Each group of "X" samples (the buffer size) is placed in a buffer and transmitted as a block to the computer through one of the peripheral connections: USB, PCI-E/Thunderbolt, FireWire, or similar. The data is then copied to subsequent place(s) in memory (buffers) to allow processing by the main processor (CPU). On the way "out", the data in the computer's memory, containing processed or generated sound, is copied to a buffer before being sent back through the peripheral bus to the sound card, where it is converted back into an electrical signal by the digital-to-analog converter (DAC). Input-to-output latency depends on multiple factors: the audio buffer size is the most important one, but the hardware characteristics, as well as the driver type (particularly on the Windows operating system), may play a decisive role as well.
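The dependence on buffer size can be made concrete: a buffer of N samples at sampling rate f takes N / f seconds to fill. The sketch below assumes the simplest possible model, one input buffer plus one output buffer and nothing else, and computes that floor for common settings; the function names are illustrative.

```python
def buffer_ms(buffer_samples: int, sample_rate_hz: int) -> float:
    """Time needed to fill one buffer of audio at the given sampling rate."""
    return buffer_samples / sample_rate_hz * 1000.0


def buffering_floor_ms(buffer_samples: int, sample_rate_hz: int) -> float:
    """Lower bound for input-to-output latency: one input plus one output buffer.

    Real interfaces add converter, driver and safety-buffer delays on top of this.
    """
    return 2 * buffer_ms(buffer_samples, sample_rate_hz)


for rate in (44_100, 48_000):
    for size in (64, 128, 256):
        print(f"{size:4d} samples @ {rate} Hz: "
              f"{buffer_ms(size, rate):5.2f} ms per buffer, "
              f"floor {buffering_floor_ms(size, rate):5.2f} ms")
```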

Exact tests of audio interfaces are beyond the scope of this paper. The author measured input-to-output latency on a computer running macOS with two different external audio interfaces. For a buffer size of 128 samples the overall latency was in the range of 8-11 ms, and for 256 samples it measured 13-16 ms, depending on the audio device. Based on the author's experience and anecdotal evidence, on Windows computers it is difficult to achieve comparably low latencies using the built-in sound card. However, when using a dedicated external interface, similar latencies are definitely achievable.
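These measurements are consistent with the buffer arithmetic. Assuming a 48 kHz sampling rate (the paragraph does not state one) and a round trip through one input and one output buffer, doubling the buffer from 128 to 256 samples should add about 2 × 128 / 48000 s, or 5.3 ms, close to the 5 ms step between the two measured ranges. A short check in Python:

```python
SAMPLE_RATE_HZ = 48_000  # assumption: the measurements do not state the rate

# Measured input-to-output latency ranges from the text, in ms.
measured_ms = {128: (8, 11), 256: (13, 16)}


def buffer_ms(buffer_samples: int, sample_rate_hz: int = SAMPLE_RATE_HZ) -> float:
    return buffer_samples / sample_rate_hz * 1000.0


# The input buffer and the output buffer each grow by (256 - 128) samples.
predicted_step_ms = 2 * (buffer_ms(256) - buffer_ms(128))
observed_step_ms = measured_ms[256][0] - measured_ms[128][0]

# Under this model, whatever the two buffers do not explain is attributed
# to converters, drivers and other fixed overhead.
for size, (low, high) in measured_ms.items():
    overhead_low = low - 2 * buffer_ms(size)
    overhead_high = high - 2 * buffer_ms(size)
    print(f"{size} samples: overhead {overhead_low:.1f}-{overhead_high:.1f} ms")

print(f"predicted step {predicted_step_ms:.1f} ms, observed step {observed_step_ms} ms")
```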

As this paper describes sending audio data between computers, let us look at the latencies on the computer network. Over a wired ethernet connection between two computers, packets will typically arrive within 1 ms. For two computers located in the same city and connected to the internet through cable modems, it will take approximately 10-20 ms for the network packets to arrive, depending on the quality of the internet service provider. It is worth noting that the data also needs to be "buffered" when it is sent over the network. If not enough data is buffered to accommodate fluctuations in the timing of network packets, the receiver might not get the required data on time, and a momentary dropout in the signal will occur. Wireless networks introduce additional latency on the order of a few ms, depending on the distance from the access point, but more importantly they typically introduce noticeable packet loss, which degrades the audio signal.
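The role of buffering on the receiving side can be illustrated with a toy model, which is not Jamulus' actual jitter-buffer logic: packets are sent at a fixed interval, each takes a different time to cross the network, and the receiver starts playback a fixed amount of time after the first packet arrives. Any packet arriving after its playback slot becomes a dropout. The one-way delays below are hypothetical values that average about 15 ms with occasional spikes, and the 128-sample packet size is an assumption.

```python
def count_dropouts(one_way_delays_ms, packet_period_ms, jitter_buffer_ms):
    """Count packets that miss their playback slot.

    Packet i leaves the sender at i * packet_period_ms and spends
    one_way_delays_ms[i] on the network. Playback of packet 0 starts
    jitter_buffer_ms after it arrives; every later packet is due exactly
    one packet period after the previous one.
    """
    playback_start = one_way_delays_ms[0] + jitter_buffer_ms
    late = 0
    for i, delay in enumerate(one_way_delays_ms):
        arrival = i * packet_period_ms + delay
        due = playback_start + i * packet_period_ms
        if arrival > due:
            late += 1
    return late


PACKET_PERIOD_MS = 128 / 48_000 * 1000  # assumed: 128-sample packets at 48 kHz
# Hypothetical one-way delays for ten consecutive packets, in ms.
delays_ms = [12, 14, 11, 19, 13, 25, 12, 15, 13, 12]

for buffer_ms in (0, 5, 10, 15):
    dropouts = count_dropouts(delays_ms, PACKET_PERIOD_MS, buffer_ms)
    print(f"jitter buffer {buffer_ms:2d} ms -> {dropouts} of {len(delays_ms)} packets late")
```

In this model every millisecond of buffer is added directly to the latency, so the choice is a trade-off between dropouts and delay.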

Finally, if the data is compressed before being sent over the network, the compression and decompression process introduces additional latency. In the Jamulus system, the Opus codec adds 5 ms of latency for each compression/decompression step.

When sending audio data over the network, the latency depends on the chosen parameters, the software protocol and hardware capabilities, the stability of the internet connection, and whether the connection between the two computers is direct or goes through a server. For two computers in the same city it is possible to achieve latencies on the order of 25-30 ms for direct connections, and 50-60 ms for connections through a server. These values are the "best case" scenario and are likely to be worse during internet "rush hours".
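The gap between direct and server-based connections can be seen in a rough latency budget. In the sketch below every component value is an assumption for illustration: 128-sample buffers at 48 kHz, 10 ms of network travel and a 5 ms jitter buffer per leg, and 5 ms of codec delay per leg (reading the 5 ms "step" above as one compression plus decompression). Because a Jamulus server decodes, mixes, and re-encodes the audio for every client, a path through the server pays the network, buffering, and codec costs twice.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Leg:
    """One network leg: encode at the sender, travel, buffer and decode at the receiver."""
    network_ms: float        # one-way packet travel time
    codec_ms: float          # compression + decompression on this leg
    jitter_buffer_ms: float  # receive-side buffering against timing fluctuations

    def total_ms(self) -> float:
        return self.network_ms + self.codec_ms + self.jitter_buffer_ms


def mic_to_ear_ms(io_buffer_ms: float, legs: Sequence[Leg], server_block_ms: float = 0.0) -> float:
    """Sender's input buffer + every leg + optional server processing + receiver's output buffer."""
    return io_buffer_ms + sum(leg.total_ms() for leg in legs) + server_block_ms + io_buffer_ms


IO_BUFFER_MS = 128 / 48_000 * 1000  # assumed 128-sample buffers at 48 kHz
leg = Leg(network_ms=10.0, codec_ms=5.0, jitter_buffer_ms=5.0)  # hypothetical values

direct = mic_to_ear_ms(IO_BUFFER_MS, [leg])
via_server = mic_to_ear_ms(IO_BUFFER_MS, [leg, leg], server_block_ms=IO_BUFFER_MS)
print(f"direct:     {direct:5.1f} ms")
print(f"via server: {via_server:5.1f} ms")
```

The totals (about 25 ms direct, 48 ms via the server) land near the quoted ranges only because the inputs were picked from the same ranges; the useful part is the structure, which shows where a given setup loses time.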

Online rehearsal setup

Overview

The setup for online rehearsals with the Evergreen Community Orchestra was created with the following objectives in mind:

- to allow the musicians to rehearse in a group, and thus keep improving on the level of performance,

- to provide an opportunity for people to play together, and

- to use technologies that the musicians already own, to the extent possible.

The sound coming from the musicians was delayed relative to the click track and the accompaniment. To compensate for that, the local monitoring of the accompaniment track was delayed by 110 ms, which created an impression of relative synchronicity between the accompaniment, the click track, and the musicians' performance.
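This compensation amounts to a fixed delay line in the conductor's local monitoring path. In the actual setup it was done inside Reaper; the Python sketch below only illustrates the idea, converting the delay to samples (110 ms at an assumed 48 kHz is 5280 samples) and emitting each incoming sample again that many samples later. The class name is hypothetical.

```python
from collections import deque


class DelayLine:
    """Fixed delay: every sample leaves delay_samples samples after it arrives."""

    def __init__(self, delay_ms: float, sample_rate_hz: int):
        self.delay_samples = round(delay_ms * sample_rate_hz / 1000)
        # Pre-fill with silence, so the first delay_samples outputs are zeros.
        self._buffer = deque([0.0] * self.delay_samples)

    def process(self, block):
        """Push one block of samples in and return a block of the same length."""
        out = []
        for sample in block:
            self._buffer.append(sample)
            out.append(self._buffer.popleft())
        return out


monitor_delay = DelayLine(delay_ms=110, sample_rate_hz=48_000)
print(monitor_delay.delay_samples)  # 5280

# An impulse at a toy rate of 1 kHz comes out three samples later.
toy = DelayLine(delay_ms=3, sample_rate_hz=1_000)
print(toy.process([1.0, 0.0, 0.0, 0.0, 0.0]))  # [0.0, 0.0, 0.0, 1.0, 0.0]
```

Because the deque persists between calls, the delay carries across block boundaries, as it must when audio arrives in buffers.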

A video has been prepared to document the most recent rehearsal. While the final mix was adjusted for balance, including the addition of a reverb effect, no audio editing was done to align the musicians' performances in time; the timing of their performance reflects the timing of the audio arriving at the server. The accompaniment and the click track have been slightly delayed to resemble the setup during the rehearsal, from the conductor's perspective. When rehearsing, the musicians listened to both the accompaniment and the click track, and while the conductor still makes the typical conducting gestures, the musicians can't reliably use them for synchronization ("I wave my hands because I like to").

Some specifics of the Jamulus setup are discussed below; however, the Jamulus documentation is a more complete source of information in that regard.

Conductor's setup

Hardware:

- main computer with wired ethernet connection

- two screens are ideal, to have access to both the Jamulus mixer and the accompaniment playback controls

- audio interface

- microphone

- headphones

- another computer for running video conferencing software

  - the second computer was used in order to 1) save the resources of the main computer, which are needed for audio processing, and 2) provide an additional screen for the video calling software

- additionally, Jamulus Server was running on a third computer at a different location with a fast internet connection

Software:

- Jamulus, for low-latency audio transmission

- Reaper, for playing back the accompaniment along with a click track

  - Reaper's ability to control the master tempo with a single slider became very useful, allowing difficult passages to be repeated at a slower tempo

- JACK audio, for routing signals between Reaper and Jamulus

- SampleTank virtual sampler, used to synthesize piano sounds

- Google Meet, for the video call

- Jamulus Server, running on a dedicated computer, to which all the participants connected

Musicians' setup

Hardware:

- laptop

- wired headphones

  - Bluetooth/wireless headphones can't be used, as they add a relatively high and non-deterministic latency of 50-300 ms

- optionally: wired ethernet connection

- optionally: external microphone

Software:

- Jamulus

- Google Meet (running in a browser)

Setup steps:

- installing Jamulus

- on Windows: installing ASIO4All driver

- setting up user name in Jamulus

- on Windows: selecting the proper audio device in ASIO4All

- making sure that musicians plug their headphones in before starting Jamulus

  - by default Jamulus starts monitoring input to output upon connecting to the server, which causes feedback if headphones are not used

- connecting to the server and testing sound

The latency introduced by the ASIO4All driver should be low, but the author could not confirm this. Moreover, additional latency was introduced because a number of musicians were using a WiFi connection.

The musicians' internet connections needed to meet certain criteria. Speed is not as crucial as stability and connection latency. Most cable modems provided reasonable performance, but in at least one case a DSL connection had a prohibitively high latency (over 1 second for packets to travel from the musician to the server), which prevented that musician from participating. While connection speed can easily be tested using various online services, tests of connection stability and latency are less common; these parameters, however, become apparent as soon as a Jamulus (or other low-latency system) connection is established.
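Stability and latency can still be checked before a rehearsal with simple tools. One rough approach, sketched below, is to collect a series of round-trip times (for example with the operating system's ping command pointed at the server) and summarize them: the mean indicates latency, while the spread and standard deviation indicate how much a jitter buffer would have to absorb. The two sample series are hypothetical, standing in for a stable wired connection and an unstable WiFi one, and the standard deviation is used only as a simple proxy for jitter.

```python
from statistics import mean, pstdev


def connection_report(rtt_ms):
    """Summarize a series of round-trip times, in milliseconds."""
    return {
        "mean_ms": mean(rtt_ms),
        "jitter_ms": pstdev(rtt_ms),  # population standard deviation as a jitter proxy
        "worst_ms": max(rtt_ms),
        "spread_ms": max(rtt_ms) - min(rtt_ms),
    }


# Hypothetical ping results, in ms.
wired = [22, 23, 22, 24, 23, 22]
wifi = [24, 31, 23, 58, 26, 40]

for name, samples in (("wired", wired), ("wifi", wifi)):
    report = connection_report(samples)
    print(name, {key: round(value, 1) for key, value in report.items()})
```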

The crucial part of the musicians' setup is the ability to control their individual mix of all the instruments. Musicians were encouraged to turn up only the accompaniment/click track, and to turn down all other instruments.

Server requirements and limitation on the number of participants

To date, we had up to 16 musicians (including the conductor) participating in a single rehearsal. The two bottlenecks of the system are:

- CPU speed of the server

- upload speed of the server's internet connection

As far as upload speed goes, we have so far been using the "low" quality setting with the "Mono" channel setup. With these settings a single stream takes about 300 kbps. A typical fast cable connection with a 100 Mbps download speed has an upload speed of 5 Mbps. For reliability it should be assumed that only a portion of that theoretical bandwidth is available, even when no other devices or services are using that internet connection at the same time. Running a video call on the same computer as the server process will greatly limit the bandwidth available for Jamulus. Since the server sends a separate stream to every client, with a 5 Mbps connection for the server it should be possible to connect 8-12 clients with a low-quality mono signal, assuming that no other significant traffic is present on that connection. The client machines only need enough upload speed to accommodate a single 300 kbps stream, plus the bandwidth of the video connection (typically 500-1500 kbps).
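The client count follows from dividing the usable upload bandwidth by the per-stream rate, one stream per client. In the sketch below the usable fraction of the nominal upload speed is an assumption; fractions of 50% and 75% of a 5 Mbps uplink bracket the 8-12 clients mentioned above. The function names are illustrative.

```python
def max_clients(upload_kbps: float, stream_kbps: float = 300.0,
                usable_fraction: float = 0.5) -> int:
    """How many per-client streams fit into the usable part of the server's uplink."""
    usable_kbps = upload_kbps * usable_fraction  # usable_fraction is an assumption
    return int(usable_kbps // stream_kbps)


def client_upload_kbps(stream_kbps: float = 300.0, video_kbps: float = 1500.0) -> float:
    """A client sends one audio stream plus its video call."""
    return stream_kbps + video_kbps


for fraction in (0.5, 0.75):
    print(f"usable {fraction:.0%} of 5 Mbps -> {max_clients(5000, usable_fraction=fraction)} clients")

# Worst case for a musician's own uplink, using the upper video estimate.
print(f"client needs about {client_upload_kbps():.0f} kbps upload")
```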

By default, the server accepts up to 10 connections at a time. To increase this limit, the server needs to be started from the command line with the -u (or --numchannels) parameter set to a higher number.

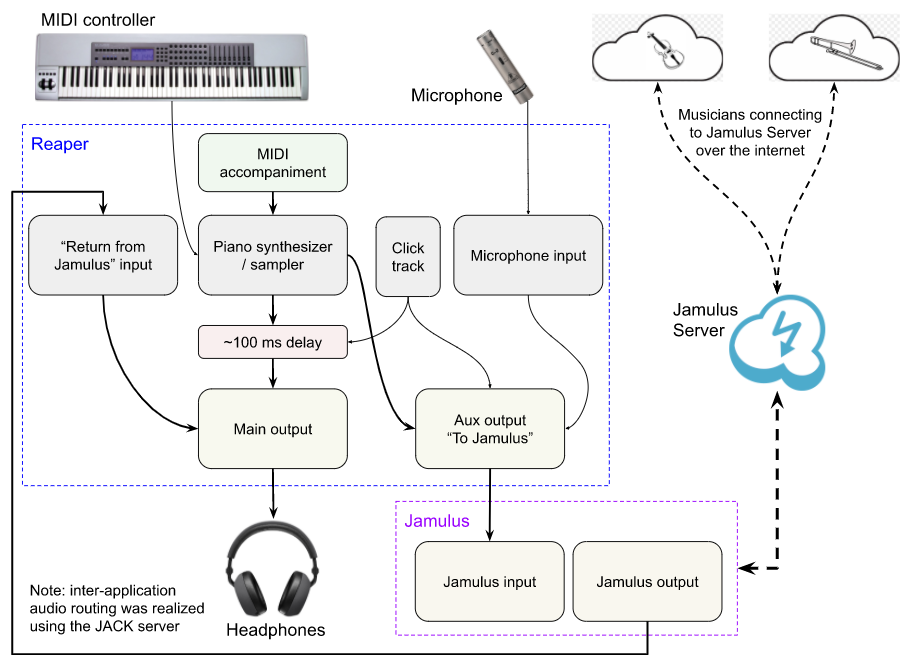

Routing

The following graph represents the audio routing between Reaper and Jamulus on the conductor's machine. Audio is routed using the JACK server, which allows inter-application audio routing. Reaper is used to mix all of the signals. The delay in the audio path going to the headphones aims to compensate for the delay of the audio traveling over the internet. The thicker solid lines represent the path of the "musical sound": both the accompaniment and the parts played remotely by the musicians.
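The routing can also be described as a small graph with a delay on each edge, which makes the purpose of the local delay visible: both routes from the accompaniment to the conductor's headphones should take the same time. This is one simplified reading of the routing described above, not a copy of the figure. The node names are hypothetical labels rather than Reaper or JACK port names, the 55 ms one-way network figure is an assumption taken from the estimate in the Preliminary results, and the model assumes the musicians play exactly in time with what they hear.

```python
from collections import defaultdict

# (source, destination) -> delay in ms added on that edge (hypothetical values).
EDGES = {
    ("accompaniment", "jamulus_out"): 0.0,
    ("jamulus_out", "remote_musicians"): 55.0,  # assumed one-way network latency
    ("remote_musicians", "jamulus_in"): 55.0,   # musicians play along and send back
    ("jamulus_in", "headphones"): 0.0,
    ("accompaniment", "monitor_delay"): 110.0,  # local compensation delay
    ("monitor_delay", "headphones"): 0.0,
}


def path_delays(edges, source, target):
    """Total delay of every route from source to target (the graph must be acyclic)."""
    graph = defaultdict(list)
    for (src, dst), delay in edges.items():
        graph[src].append((dst, delay))

    totals = []

    def walk(node, elapsed):
        if node == target:
            totals.append(elapsed)
            return
        for nxt, delay in graph[node]:
            walk(nxt, elapsed + delay)

    walk(source, 0.0)
    return totals


print(path_delays(EDGES, "accompaniment", "headphones"))  # [110.0, 110.0]
```

If the two totals differ, the conductor hears the musicians early or late relative to the accompaniment by that difference, which is what adjusting the delay "by ear" corrects.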

Preliminary results

In its current form, the described system allows rehearsing with 15+ musicians. It is possible to conduct a rehearsal, fix mistakes, etc., provided that a common timing reference is available. At the very least, the participants need a stable internet connection, a laptop/computer with a built-in microphone, and wired headphones. The musicians' reception of this setup has been very positive so far.

The overall delay between the musicians and the conductor has not been accurately measured yet. However, the local delay for the accompaniment, which was adjusted "by ear" to match the sound coming from the playing musicians, is currently set at 110 ms. Since this delay covers a round trip (the accompaniment travels to the musicians, and their playing travels back to the conductor), it suggests that the average "one way" latency between two computers in our setup is around 55 ms. This roughly matches the numbers reported by the musicians, as approximated by Jamulus (the "overall delay" parameter), which ranged between 45 and 70 ms.

Future work

In the future the author would like to fine-tune the system to ensure that the lowest possible latency is achieved, given the connection parameters. To achieve that, the ASIO4All settings should be fine-tuned, and the musicians should be encouraged to use a wired internet connection. Using another low-latency system that does not use compression could help drive the latency down, although it might be prohibitive in terms of the difficulty of setup and operation, or because of its bandwidth requirements. Finally, attempts to play without a click track are planned, provided that the latency can be brought down further.

Acknowledgements

The author would like to thank the Department of Digital Arts and Experimental Media (DXARTS) at the University of Washington for their continued support in all areas, the members of the Evergreen Community Orchestra for their involvement in the project, and Steve Rodby, whose expertise in timing, as well as countless tests, helped the author develop this and other systems for distant collaboration.